Signal Processing in Real-time: Bridging the Gap Between Ideal Sampling and Real-World Data Streams

Understand the complexities of performing signal processing in realtime. Learn how to build robust signal processing systems that can handle irregular data streams.

Signal processing is crucial for extracting valuable insights from raw data. It is used across a range of data stream processing applications, such as sensor data processing, IoT data analytics, and financial time series analysis. The typical signal processing setup is built on the assumption that data is regularly sampled at precise intervals. However, realtime data processing does not meet this assumption: signals are collected from disparate, decoupled data sources, which leads to irregular sampling and out-of-order data points. In this less predictable environment, traditional signal processing algorithms designed for regularly sampled data may not suffice. You will need a realtime signal processing system that can accommodate out-of-order and irregularly sampled data points.

This article explores signal processing fundamentals, how their assumptions differ from the reality of real-world data stream processing, and how Pathway tackles this challenge to unlock effective digital signal processing in asynchronous and less structured scenarios. It is the foundation for our signal processing tutorials on applying a Gaussian filter to out-of-order data points and on combining data streams with upsampling.

What is Signal Processing?

Signal processing is a broad field that encompasses a wide range of techniques used to manipulate signals, typically in the time or frequency domains. Usually, signals are considered time-varying data, where the time axis represents the progression of discrete samples.

Common Operations in Signal Processing

There are a number of common operations in signal processing:

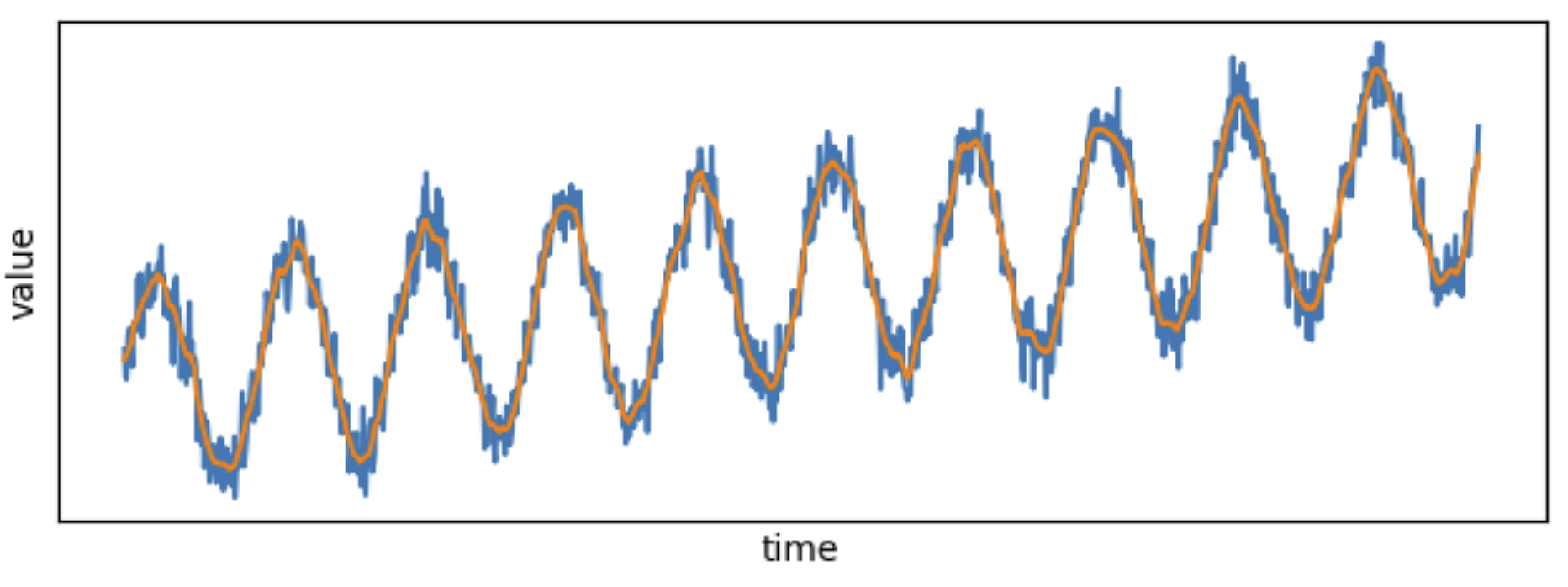

- Smoothing and Filtering: Smoothing and filtering reduce noise, remove unwanted variations and irregularities, and reveal the underlying patterns in a signal, making trends easier to analyze and interpret.

- Feature Extraction: Feature extraction aims to identify and extract relevant information or features from a signal, which can be used for further analysis or classification.

- Transformations: Signal transformations, such as Fourier transforms, convert signals from the time domain to the frequency domain and vice versa, revealing different characteristics.
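The three operations listed above can be sketched in a few lines. This is a minimal illustration using only the Python standard library, on a synthetic signal made up for the example; the function names are hypothetical, and a real project would more likely rely on a numerical library than on this naive transform.

```python
import cmath
import math

def moving_average(samples, width):
    """Smoothing: average each sample with its neighbours (centered window)."""
    half = width // 2
    smoothed = []
    for i in range(len(samples)):
        window = samples[max(0, i - half): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed

def dft_magnitudes(samples):
    """Transformation: naive discrete Fourier transform, magnitudes of bins 0..N/2."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]

def dominant_frequency(samples, sample_rate):
    """Feature extraction: frequency (in Hz) of the strongest non-constant component."""
    mags = dft_magnitudes(samples)
    k = max(range(1, len(mags)), key=lambda i: mags[i])
    return k * sample_rate / len(samples)

# Synthetic signal: a 5 Hz sine plus a weaker 25 Hz ripple, sampled at 64 Hz for one second.
sample_rate = 64
signal = [
    math.sin(2 * math.pi * 5 * t / sample_rate)
    + 0.3 * math.sin(2 * math.pi * 25 * t / sample_rate)
    for t in range(sample_rate)
]

smoothed = moving_average(signal, width=3)
print(dominant_frequency(signal, sample_rate))  # 5.0
```

Note that every function here takes a plain list of values and relies on the samples being evenly spaced, which is exactly the assumption discussed in the rest of this article.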

What is Digital Signal Processing?

Digital signal processing is a subfield of signal processing working with digital signals (as opposed to analog signal processing, which deals with analog signals).

Digital Signal Processing vs. Data Stream Processing

In digital signal processing, signals are typically represented by discrete samples taken at regular intervals. The typical DSP setup is highly concentrated and coordinated: a dedicated process meticulously samples, processes, and sends data using a global clock for synchronization. Everything follows a regular and precise timing pattern: think of an audio processor or broadband modem in the low layers. These systems operate under the common assumption that the sampling rates are perfect and consistent: with a sample every second, the ith data point has been sampled at second i precisely. This synchrony and absence of failure makes it possible to wait for all the points to be gathered before any computation is performed.
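In code, the regular-sampling assumption means a signal can be stored as a plain array, with time implied by position. The sketch below uses made-up values and hypothetical helper names to show how much of the arithmetic silently depends on that assumption.

```python
SAMPLE_RATE_HZ = 1.0  # one sample per second, as in the example above

# In the idealized setting, samples carry no timestamps: position is time.
samples = [0.0, 0.8, 1.0, 0.6, -0.1, -0.7]

def time_of(index, sample_rate=SAMPLE_RATE_HZ):
    """Timestamp implied by the position of a sample in the array."""
    return index / sample_rate

def first_difference(values, sample_rate=SAMPLE_RATE_HZ):
    """Approximate derivative. Dividing by a constant step is only valid
    because neighbouring samples are assumed to be exactly 1 / sample_rate apart."""
    step = 1.0 / sample_rate
    return [(b - a) / step for a, b in zip(values, values[1:])]

print([time_of(i) for i in range(len(samples))])
print(first_difference(samples))
```

If a single sample is missing or late, every later index maps to the wrong time and the constant step no longer holds.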

In many real-world data processing applications, this idealized scenario is unrealistic.

First, the sensors themselves are not perfect. Not only may a sensor be faulty, for instance when its internal clock slowly drifts away from the "real" time, but data may also be sampled at irregular intervals, leading to variations in the time between samples. The resulting data is noisy and contains gaps.

The data collection adds another layer of imperfection. Data packets may be lost or delayed, which means we must deal with incomplete and out-of-order data streams.
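To make this concrete, here is a minimal sketch of what such a stream looks like once each point carries its own timestamp. The `Reading` type, the sample values, and the gap tolerance are all assumptions made for the illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Reading:
    timestamp: float  # seconds, as reported by the sensor
    value: float

# Arrival order differs from timestamp order, and the sample expected near t=3 was lost.
arrivals = [
    Reading(0.0, 1.0),
    Reading(1.1, 1.4),
    Reading(4.0, 0.9),
    Reading(1.9, 1.3),  # delayed packet: arrives after the reading at t=4.0
    Reading(5.2, 0.7),
]

def in_time_order(readings):
    """Sort by sensor timestamp instead of trusting arrival order."""
    return sorted(readings, key=lambda r: r.timestamp)

def find_gaps(readings, expected_period, tolerance=0.5):
    """Return (start, end) timestamp pairs whose spacing exceeds the
    expected period by more than `tolerance` periods."""
    ordered = in_time_order(readings)
    limit = expected_period * (1 + tolerance)
    return [
        (a.timestamp, b.timestamp)
        for a, b in zip(ordered, ordered[1:])
        if b.timestamp - a.timestamp > limit
    ]

print([r.timestamp for r in in_time_order(arrivals)])  # [0.0, 1.1, 1.9, 4.0, 5.2]
print(find_gaps(arrivals, expected_period=1.0))        # [(1.9, 4.0)]
```

Sorting and gap detection like this only work on data that has already arrived, so the answer can change each time a delayed packet shows up.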

In complex systems that integrate data samples from several sources, data misalignment is as serious as signal noise! This challenge requires a different approach to data processing compared to idealized signal processing, where the focus is on precise time alignment.
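One common way to reconcile misaligned sources is to estimate the slower signal at the timestamps of the faster one. The sketch below uses linear interpolation, which is only one possible choice, on two made-up sensor series; the function names are hypothetical.

```python
from bisect import bisect_left

def interpolate(series, t):
    """Linearly interpolate a time-sorted list of (timestamp, value) pairs at time t.
    Returns None outside the observed range rather than extrapolating."""
    times = [ts for ts, _ in series]
    if not series or t < times[0] or t > times[-1]:
        return None
    i = bisect_left(times, t)
    if times[i] == t:
        return series[i][1]
    (t0, v0), (t1, v1) = series[i - 1], series[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align(fast, slow):
    """Pair every sample of `fast` with the value of `slow` estimated at the same instant."""
    return [(t, v, interpolate(slow, t)) for t, v in fast]

# Two sources observing the same period at different rates.
fast_sensor = [(0.0, 10.0), (0.5, 11.0), (1.0, 12.0), (1.5, 13.0), (2.0, 14.0)]
slow_sensor = [(0.0, 100.0), (2.0, 200.0)]

for row in align(fast_sensor, slow_sensor):
    print(row)
```

The interpolated values depend on the neighbouring points of the slow source, so a late point from that source changes the aligned result for every timestamp around it.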

Signal Processing with Pathway

Because they assume perfect sampling, standard DSP systems do not support the addition of late data points. Out-of-order and irregularly sampled points are buffered until all points have been received, which delays the response; there is no way to push a late point through the system.

Pathway is built to work with real-world and realtime sensor data. Its incremental engine enables you to build reactive data products that automatically update themselves whenever out-of-order data points arrive. As a developer, you only have to describe the logic once and Pathway will handle the data updates under the hood. In practice, this means that you can manipulate your streaming data as if it were static, i.e. as if all the data had already been gathered. You can write your processing logic without thinking about the order in which data will arrive. Pathway will handle any irregular or out-of-order data by updating its results automatically whenever these points arrive.
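The idea of results that revise themselves can be illustrated with a toy example. The sketch below is plain Python and does not use or represent Pathway's API: `WindowedMean` is a hypothetical class written for this illustration, computing a per-window mean that is updated whenever a point arrives, however late.

```python
class WindowedMean:
    """Toy stand-in for a reactive computation: mean of values per fixed-size
    time window, revised on every arrival regardless of arrival order."""

    def __init__(self, window_size):
        self.window_size = window_size
        self.sums = {}
        self.counts = {}

    def add(self, timestamp, value):
        """Ingest one point and return (window_start, updated_mean)
        for the only window that the point affects."""
        start = (timestamp // self.window_size) * self.window_size
        self.sums[start] = self.sums.get(start, 0.0) + value
        self.counts[start] = self.counts.get(start, 0) + 1
        return start, self.sums[start] / self.counts[start]

    def results(self):
        """Current mean of every window seen so far, ordered by window start."""
        return {s: self.sums[s] / self.counts[s] for s in sorted(self.sums)}

pipeline = WindowedMean(window_size=10.0)
for t, v in [(1.0, 2.0), (12.0, 6.0), (15.0, 8.0)]:
    pipeline.add(t, v)
print(pipeline.results())      # {0.0: 2.0, 10.0: 7.0}

# A late point for the first window arrives after newer data was processed.
print(pipeline.add(4.0, 4.0))  # (0.0, 3.0)
print(pipeline.results())      # {0.0: 3.0, 10.0: 7.0}
```

The logic is written once, with no special case for late data: the late point simply updates the one window it belongs to, and the other results stay untouched.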

Building Realtime Signal Processing Pipelines with Pathway

Now that you understand the complexities of working with realtime signal processing, you may want to get some hands-on experience by working through one of our signal processing tutorials: